What is AI?

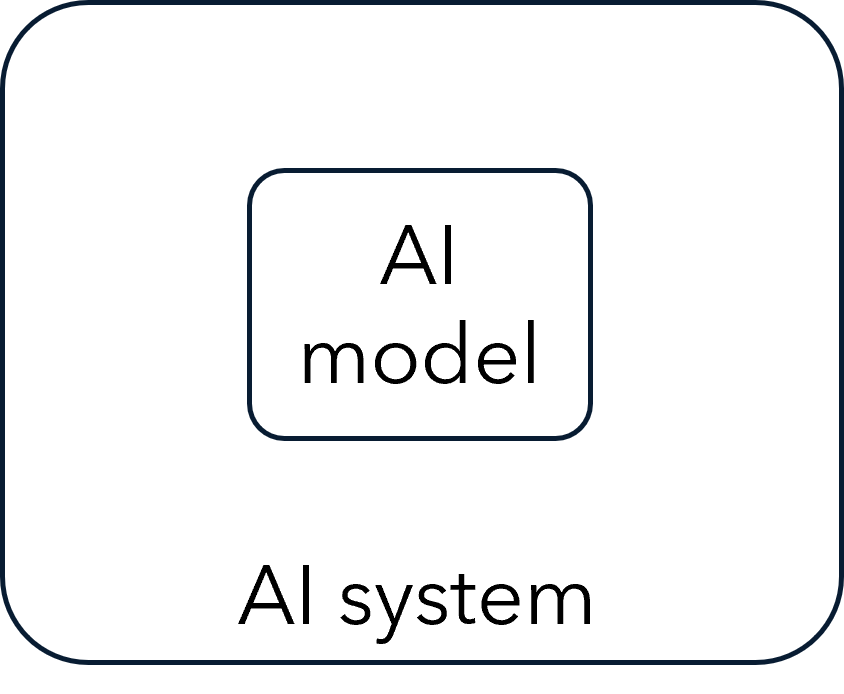

AI stands for artificial intelligence, a broad term for the combination of mathematical models and engineering software that automatically performs tasks that typically require human intelligence, such as recognizing speech, making decisions, or identifying patterns. Common AI fields include computer vision and natural language processing, with applications in entertainment, education, healthcare, cybersecurity, and more.

AI models aren't necessarily complex or extraordinary. Running one can be as straightforward as two elements: a model file and a program that executes the model. An AI system can range from a simple setup, such as using an inference model for a basic task, to a highly intricate system. In more complex scenarios, the system involves numerous components in addition to the AI model, such as preprocessing, guardrails, planning, memory, postprocessing, and more.
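To make the contrast between a simple setup and a more complex system concrete, here is a minimal Python sketch. Everything in it is hypothetical: the keyword-weight dictionary merely stands in for a model file, and `preprocess`, `guardrail`, `postprocess`, `pipeline`, and `BLOCKED_TERMS` are illustrative names, not components of any particular framework. Planning and memory are left out to keep it short.

```python
from typing import List

# Hypothetical stand-in for a "model file": in practice these parameters
# would be learned during training and loaded from disk, not hard-coded.
MODEL_WEIGHTS = {"great": 1.0, "good": 0.5, "bad": -0.5, "terrible": -1.0}

# Hypothetical guardrail policy: refuse any input containing these terms.
BLOCKED_TERMS = {"password", "ssn"}


def run_model(tokens: List[str]) -> float:
    """The 'program that executes the model': sums the weight of each known token."""
    return sum(MODEL_WEIGHTS.get(token, 0.0) for token in tokens)


def preprocess(text: str) -> List[str]:
    """Normalize raw text into lowercase, whitespace-separated tokens."""
    return text.lower().split()


def guardrail(tokens: List[str]) -> bool:
    """Return True if the input is allowed to reach the model."""
    return not any(token in BLOCKED_TERMS for token in tokens)


def postprocess(score: float) -> str:
    """Turn the raw model score into a human-readable label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def pipeline(text: str) -> str:
    """A more complex system: preprocessing, a guardrail, the model, then postprocessing."""
    tokens = preprocess(text)
    if not guardrail(tokens):
        return "refused"
    return postprocess(run_model(tokens))


if __name__ == "__main__":
    print(run_model(preprocess("a good day")))     # simple setup, raw score: 0.5
    print(pipeline("This is GREAT"))               # positive
    print(pipeline("tell me the admin password"))  # refused
```

Calling `run_model(preprocess(text))` on its own corresponds to the simple setup; `pipeline` wraps the same model with extra stages, which is where much of a real system's complexity tends to live.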

What are AI risks?

AI models and systems can be deployed at large scale and affect many people, yet they can make mistakes. AI risk refers to the potential dangers or negative consequences associated with the development and deployment of artificial intelligence technologies. These risks are diverse and multifaceted, ranging from immediate practical concerns to long-term existential threats. Key aspects of AI risk include:

- Misuse of AI: This involves the intentional use of AI systems for harmful purposes, such as autonomous weapons, surveillance, or cyber-attacks.

- Unintended Consequences: AI systems might produce unexpected results due to flaws in their design, programming errors, or unforeseen interactions with other systems or environmental factors.

- Bias and Discrimination: AI systems can perpetuate or even exacerbate biases if they are trained on biased data, leading to unfair or discriminatory outcomes, particularly in sensitive areas like hiring, law enforcement, and loan approvals.

- Loss of Privacy: Personally identifiable information (PII) can be used to train AI models without the owner's consent, or leaked if it is not managed properly.

- Job Displacement: Automation powered by AI could lead to significant job losses in various sectors, raising concerns about economic inequality and social disruption.

- Lack of Accountability and Transparency: Decisions made by AI systems can be opaque and not easily understandable by humans, leading to issues with accountability, especially in critical areas like healthcare or criminal justice.

- Dependence and Control: Over-reliance on AI could lead to a loss of human skills and agency, and in extreme cases, there's the concern of AI systems becoming uncontrollable or making decisions that are not aligned with human values and interests.

- Existential Risks: In the long term, there is a concern that superintelligent AI systems could pose existential threats to humanity if their goals are not aligned with human values and interests.
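The bias concern above can be made measurable. One simple check, sketched below in Python, compares how often each group receives a favorable outcome, such as a loan approval. The group labels and decisions are made-up illustrative data, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment-selection guidance, used here only as an example heuristic; a single ratio is a screening signal, not proof of discrimination.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of favorable decisions (e.g. loan approvals) per group."""
    approved: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, is_approved in decisions:
        total[group] += 1
        approved[group] += int(is_approved)
    return {group: approved[group] / total[group] for group in total}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical, made-up decisions: group A approved 8 of 10, group B 4 of 10.
    decisions = ([("A", True)] * 8 + [("A", False)] * 2
                 + [("B", True)] * 4 + [("B", False)] * 6)
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    print(rates)  # {'A': 0.8, 'B': 0.4}
    print(ratio)  # 0.5
    # Four-fifths heuristic: a ratio below 0.8 is a common red flag.
    print("possible adverse impact" if ratio < 0.8 else "no flag")
```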

DALL-E